Takato Yoshikawa, Yuki Endo, Yoshihiro Kanamori

University of Tsukuba

VISAPP 2024

Best Student Paper Award

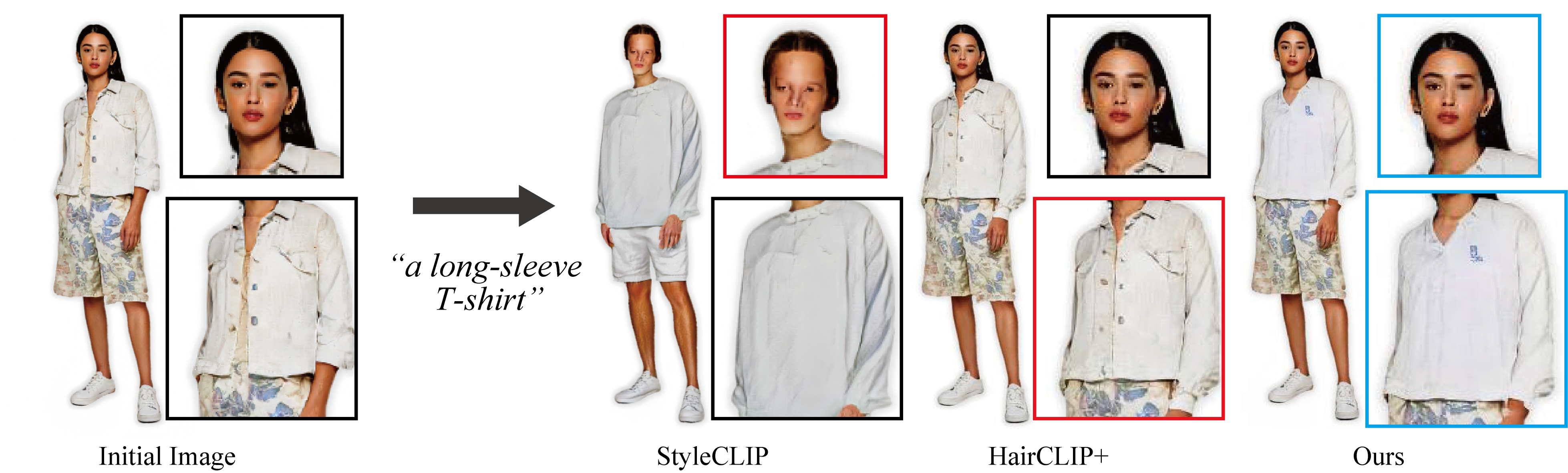

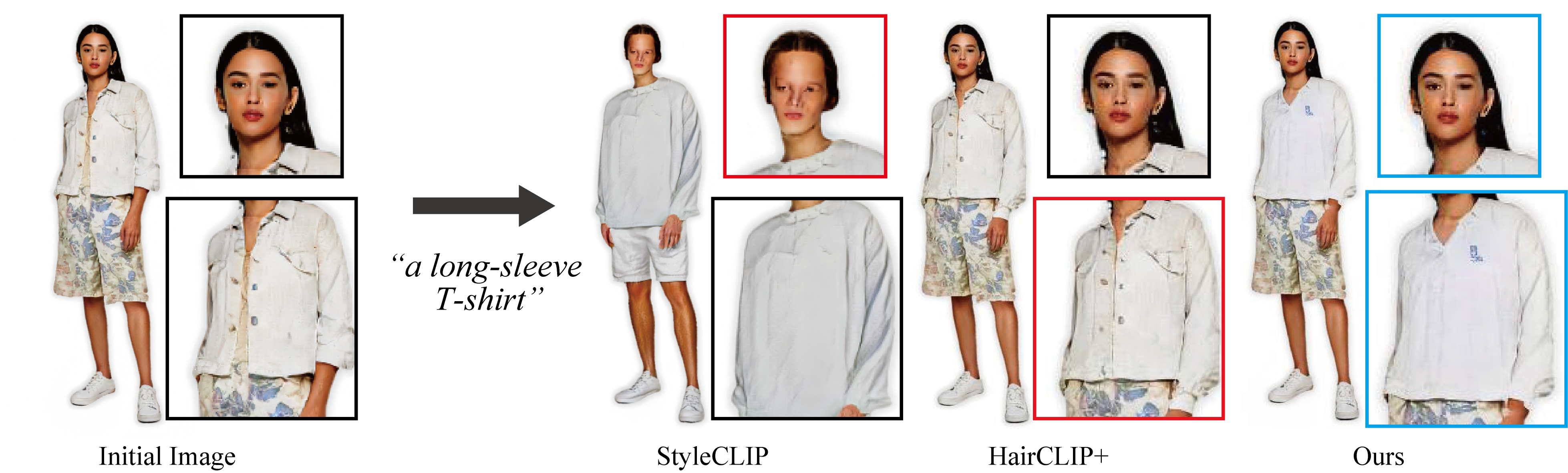

This paper tackles text-guided control of StyleGAN for editing garments in full-body human images. Existing StyleGAN-based methods struggle to handle the rich diversity of garments, body shapes, and poses. We propose a framework for text-guided full-body human image synthesis via an attention-based latent code mapper, which enables more disentangled control of StyleGAN than existing mappers. Our latent code mapper adopts an attention mechanism that adaptively manipulates individual latent codes on different StyleGAN layers under text guidance. In addition, we introduce feature-space masking at inference time to avoid unwanted changes caused by text inputs. Our quantitative and qualitative evaluations show that our method controls generated images more faithfully to the given texts than existing methods.

Keywords: StyleGAN; Text-guided Garment Manipulation; Full-body Human Image

@inproceedings{YoshikawaVISIGRAPP24,
  author = {Takato Yoshikawa and Yuki Endo and Yoshihiro Kanamori},
  title = {StyleHumanCLIP: Text-guided Garment Manipulation for StyleGAN-Human},
  booktitle = {International Conference on Computer Vision Theory and Applications (VISAPP 2024)},
  year = {2024}
}

Last modified: Feb 2024
